Welcome! In this guide, I'll walk you through OpenTelemetry (OTel) in a friendly, approachable way: what it is, why it matters, and how to use it effectively. Along the way I'll explain key terms such as telemetry (data your application emits about its behavior and performance) and observability (the ability to understand what's happening inside your system from that data). We'll build a practical JavaScript example together, and I'll explain OpenTelemetry's architecture step by step. This guide reflects the state of the project as of September 2025.

1. What is OpenTelemetry?

OpenTelemetry is an open-source observability framework hosted by the Cloud Native Computing Foundation (CNCF). It provides APIs, SDKs, and tools that generate, collect, process, and export telemetry data (traces, metrics, and logs) from your applications to analysis backends such as Prometheus, Jaeger, or Datadog. OpenTelemetry itself does not store or visualize the data; that is the backend's job. It was formed in 2019 from the merger of OpenTracing and OpenCensus, and it is now the go-to standard for instrumenting applications.

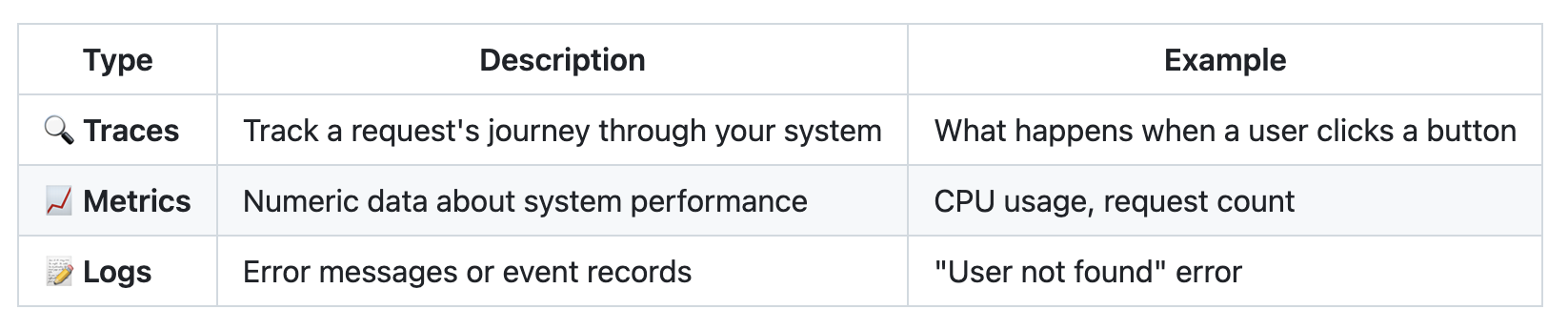

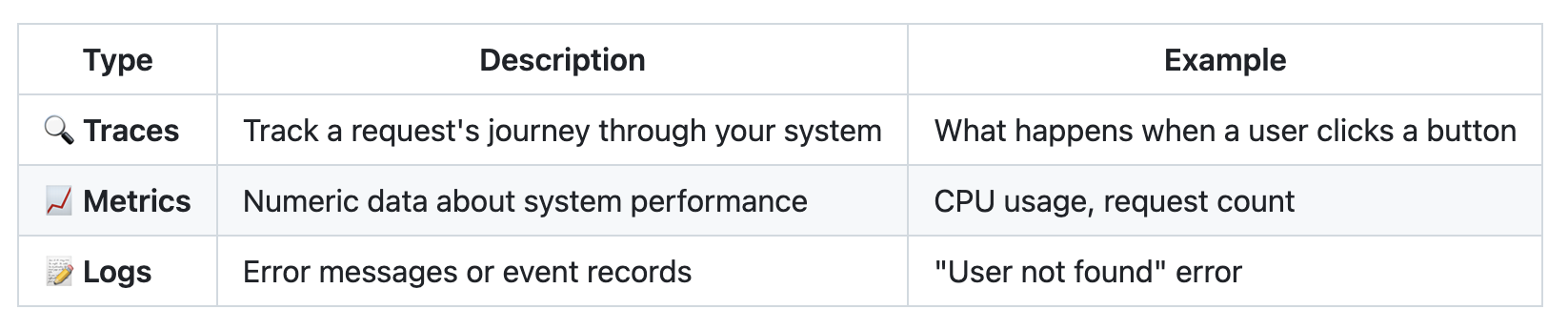

📊 The Three Pillars of Observability

Table 1. The three pillars of observability in OpenTelemetry:

- Traces follow a single request as it moves through your services.
- Metrics aggregate numeric measurements over time.
- Logs record discrete, timestamped events.

Together they give complementary views of application behavior and system performance. Source: Created by the author.

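💡 Key Insight: OpenTelemetry collects this data in a standardized, vendor-neutral way, including its own wire protocol, OTLP. You instrument your code once and can send the data to one or several analysis tools without rewriting that instrumentation, which is what prevents vendor lock-in.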

🆕 2025 Update

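At KubeCon EU 2025, OpenTelemetry was declared the "de facto standard" for observability. A new tool called Weaver helps automate telemetry work: teams define telemetry schemas based on semantic conventions, and Weaver generates code and documentation from them. In JavaScript, traces and metrics are stable, while logs are still in development. More than 12 platforms, including Splunk, AWS, and Dynatrace, are fully compatible with OpenTelemetry.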